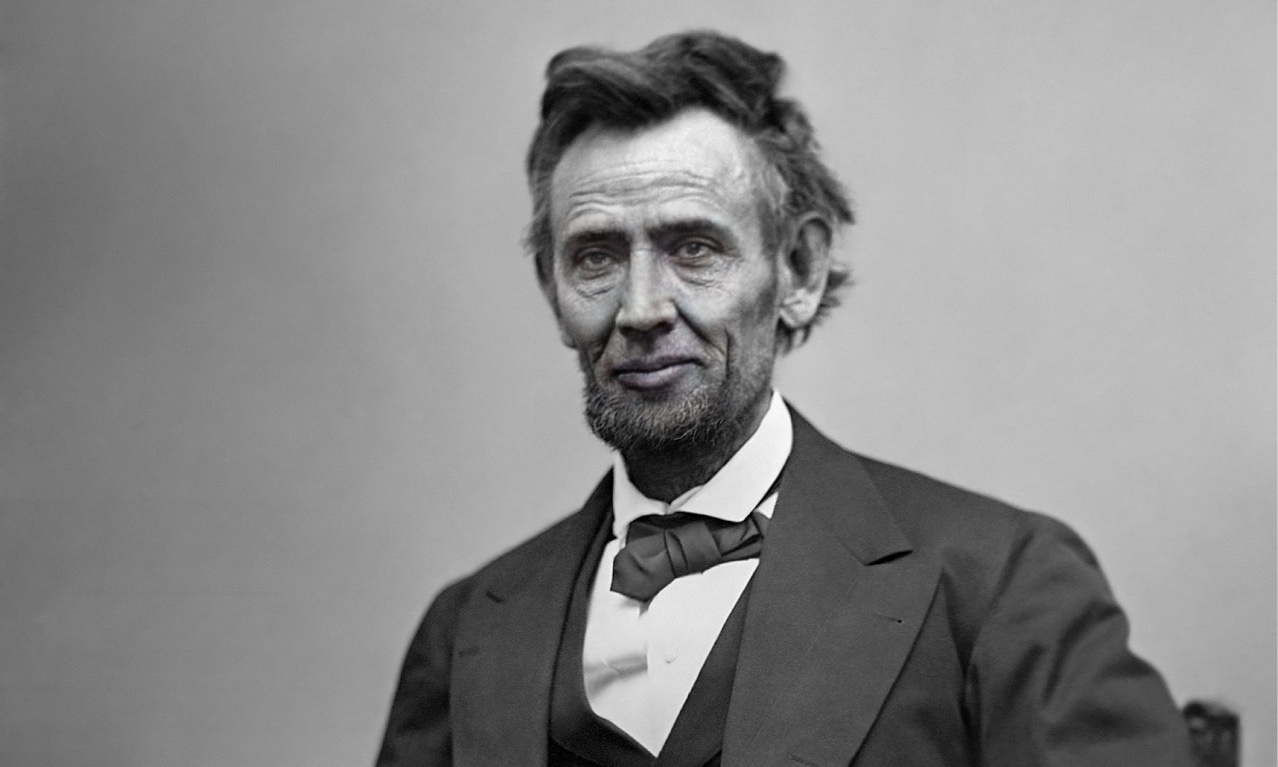

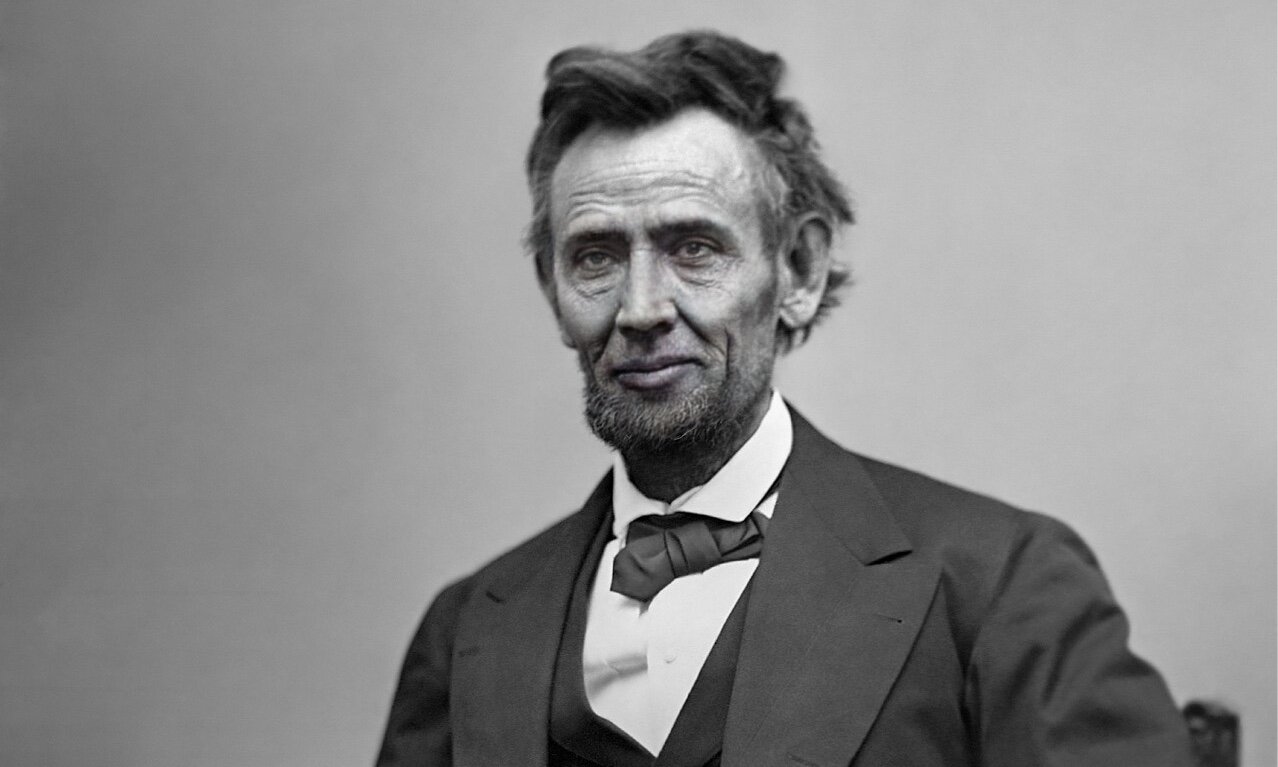

That’s not Lincoln. It’s Nicolas Cage. With machine learning, this photo could be animated and made to speak in a matter of minutes.

In the age of Fake News, what are Deepfakes?

A deepfake is an image or video generated to show, fairly convincingly, a person doing or saying something they never did or said. Deepfakes have existed in various forms for a while, but they have recently become far more pervasive. As the machine learning techniques used to make them have advanced, the barrier to entry has dropped dramatically.

Most of our developer audience will be intimately familiar with machine learning as a concept; some form of it is used in almost every app or service now coming to market. For those who aren’t familiar: put simply, machine learning is the ability of a computer system to draw a conclusion or make a prediction on its own, based on patterns it has learned from example data. This “training data,” as it is commonly called, typically consists of large data sets relevant to the task the software performs. Machine learning can be applied to almost anything: weather forecasting, predicting sports outcomes, showing you relevant posts or ads, medical diagnoses, you name it. Audio, video, and photographs are just another form of digital information, so models trained on them can fill in missing details, create photo-realistic animations, and even imitate voices.

There are numerous websites where you can upload multiple videos, decide what you want the final product to look like, pay a nominal fee, and download the result a few hours later. What started as simple face swaps has grown into a range of automated manipulation techniques that can produce end products with significant impact. Deepfakes can be used for completely innocent purposes, such as Snapchat filters, altering the appearance of movement for better artistic representation, or, my personal favorite, resurrecting the likeness of an individual for educational purposes.

Not all deepfakes, however, are well-intentioned. Concerns include lower barriers to fraud, phishing, blackmail, revenge porn, and a variety of other abusive behaviors. In the wrong hands, deepfakes can be used to cause social instability or sow chaos for personal gain. Take, for example, a deepfake video in which an executive of a publicly traded company makes damaging comments about the company. The stock price plummets because investors believe the video is authentic, or even that it might be. Millions of people lose money before the truth is revealed, and the damage may be irreversible. Meanwhile, the creator, who knew exactly what chaos the video would cause, profits from the instability.

Then there are the political applications…

In the heat of an election, deepfakes can be used to spread lies in the information wars between political parties and further divide the American public (“You said that!” “No I didn’t!”).

Congress recently took notice of the problem when many people were easily persuaded that a heavily edited video of Speaker Pelosi, which went viral, was genuine when it was not. Although multiple sources quickly identified that video as a fake, what if a politician’s fabricated statement had greater impact on a tighter timeline, like on election day? Or what if a lawmaker believes a deepfake is real and acts on it?

An individual with bad intentions could make a deepfake of a politician saying something inflammatory to provoke a reaction from another government, heighten tensions, or even spark all-out conflict. These ‘fakes’ can have real, tangible, and serious consequences if misused.

What’s the Need for Regulation? / Potential Action Plans

Washington is slowly realizing that, with the prevalence of this technology, great harm is possible. Beyond establishing general ethical standards for AI, both the public and private sectors have been considering ways to take on deepfakes directly.

A bipartisan bill to support advanced research into the security risks surrounding deepfakes has been gaining traction on the Hill. Rep. Jim Lucas (R-IN) raised the threat of deepfakes at a recent House Financial Services Committee hearing, expressing concern that media “can be altered to make it nearly impossible to distinguish between what is real and what is not.” Other members have suggested requiring watermarks on deepfakes to indicate that they are parodies. Watermarks may work for programs designed for entertainment, but they are not a holistic solution for nefarious deepfakes, which are the most concerning and whose creators have every incentive to leave them unmarked.

Platforms have recently been developing policies to help them detect and moderate deepfakes. Facebook, Microsoft, and a number of other partners are also hosting a Deepfake Detection Challenge to enlist top talent in building better mechanisms to detect and assess AI-generated content, and to help inform policy. Many platforms have already devoted sizable money and resources to rooting out these fakes as they recognize the problems they may cause. The timing is especially significant in light of potential revisions to Section 230 of the Communications Decency Act.

So many critical functions are based on some level of trust in the system. While I love a good conspiracy theory (who doesn’t?), an increased prevalence of deepfakes erodes public confidence, especially on social media. As platforms work to curb and identify deepfakes, it is also crucial to raise public awareness. If we do not educate the public about deepfakes’ existence and broad capabilities, individuals everywhere may fall for them. Technology has evolved; so too must our skepticism of everything we see on the internet.

With platforms pouring resources into detection methods, the ideal outcome would be a system in which a platform could analyze content for signs of manipulation as it is uploaded. This would let platforms take responsibility for the content they host with more confidence and streamline their own content moderation process. Users, in turn, could feel more confident that the platform applies moderation standards with less bias.

If the content was fake but otherwise fit for viewing under the website’s content policies, the platform could indicate its legitimacy directly on the video with a “likely altered” (versus “likely verified”) tag. Platforms would also need to address their policies for policing fake content, especially where a deepfake could be particularly damaging to the person impersonated.
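For developers curious how such an upload check might be wired together, here is a minimal sketch of the labeling step only. It assumes some detection model (not shown, and entirely hypothetical here) has already produced a manipulation score between 0 and 1, and that a separate policy check has decided whether the upload is otherwise fit for viewing. The names `label_upload`, `UploadDecision`, and `ALTERED_THRESHOLD`, and the threshold value of 0.5, are illustrative assumptions, not any platform’s actual system.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical cutoff: scores at or above this are tagged "likely altered".
# 0.5 is an illustrative assumption, not a recommended setting.
ALTERED_THRESHOLD = 0.5


@dataclass
class UploadDecision:
    publish: bool
    tag: Optional[str]  # "likely altered", "likely verified", or None if held back


def label_upload(manipulation_score: float, meets_content_policy: bool) -> UploadDecision:
    """Map a hypothetical detector score in [0, 1] to a publish decision and tag.

    manipulation_score: estimated probability, from some detection model,
        that the media was synthetically altered.
    meets_content_policy: whether the upload is otherwise fit for viewing
        under the platform's content policies.
    """
    if not 0.0 <= manipulation_score <= 1.0:
        raise ValueError("manipulation_score must be between 0 and 1")
    if not meets_content_policy:
        # Policy-violating uploads go to the platform's normal moderation path.
        return UploadDecision(publish=False, tag=None)
    if manipulation_score >= ALTERED_THRESHOLD:
        return UploadDecision(publish=True, tag="likely altered")
    return UploadDecision(publish=True, tag="likely verified")


if __name__ == "__main__":
    print(label_upload(0.92, True).tag)  # likely altered
    print(label_upload(0.10, True).tag)  # likely verified
```

The tags say “likely” because detectors output probabilities rather than certainties; wherever the threshold is set, it trades false positives (real videos flagged) against false negatives (fakes that slip through).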

While it is necessary for the government to take steps to protect our nation from weaponized deepfakes, the private sector is currently leading the charge on concrete solutions to the problems our fast-growing technology has created. We will continue to follow deepfakes and related artificial intelligence issues as they progress in Washington, DC, and Brussels.